Custom Knowledge Retrieval Pipeline with LLMs

for Question Answering Tasks

for Question Answering Tasks

CS 496: Deep Generative Models Spring 2023 w/ Prof. Bryan Pardo

Marco Wang | Shaobo Zhang

marcowang2024@u.northwestern.edu | shaobozhang2024@u.northwestern.edu

Motivation

We both wanted to experiment with Large Language Models (LLMs) and their capabilities to interact with our own custom datasets. Some ideas we had were to create a virtual representation of ourselves using a LLM-based conversation agent, or to create a LLM-augmented knowledge base for our private notes and documents. While retrieval-augmented LLMs are not new, there are very few exisiting implementations that are practical for our uses cases and are cost-effective.

On a practical note, retrieval-augmented LLMs allow LLMs to generate responses based on custom domain-specific data without the need to perform parameter finetuning, which can often be expensive. This is particularly beneficial for cases where the data is private or is updated frequently. Overall, through this project we hope to learn more about the knowledge retrieval using LLMs, in-context question answering and prompt engineering.

On a practical note, retrieval-augmented LLMs allow LLMs to generate responses based on custom domain-specific data without the need to perform parameter finetuning, which can often be expensive. This is particularly beneficial for cases where the data is private or is updated frequently. Overall, through this project we hope to learn more about the knowledge retrieval using LLMs, in-context question answering and prompt engineering.

Vector Database

A core part of our pipeline is the vector database (vectorDB), which we will introduce here breifly. In short, A vectorDB is a type of databse that specializes in handling embeddings. Embeddings, in the context of language models, are mathematical representations of text where words or phrases with similar meanings are mapped to similar positions in a high-dimensional space. VectorDB are simply databases provide optimized storage and querying capabilities for embeddings. There are many use cases of vectorDBs such as semantic search and long term memory for conversation agents. In our case, we use it for knowledge retrieval. For a more in depth explanation of vectorDBs, check out this article by Pinecone.

Architecture

Our pipeline consists fo three main components, a vectorDB, a LLM and the client interface. For the vectorDB, we use chromaDB, which is an open source vectorDB. The database is hosted on a serverless container using modal. For the embedding model, we are using instructor-xl, which is an instruction fine tuned embedding model based on a GTR model. The emebdding model is also hosted on modal alongside the database. For the LLM, we use a proxy-based API of OpenAI's ChatGPT 3.5 turbo model. This API essentially allows us to use chatGPT for free with a daily rate limit. The rate limit is similar to using chatGPT directly through the browser, which is more than enough for our use case.

For ingestion, we first use document loaders from langchain to turn files stored on disk into text. The text are then split into chunks of 1000-4000 characters (depending on the specific use case) with 200 characters of overlap. These chunks of text are then fed into the embedding model to generate embeddings. The embeddings are then stored in the vectorDB.

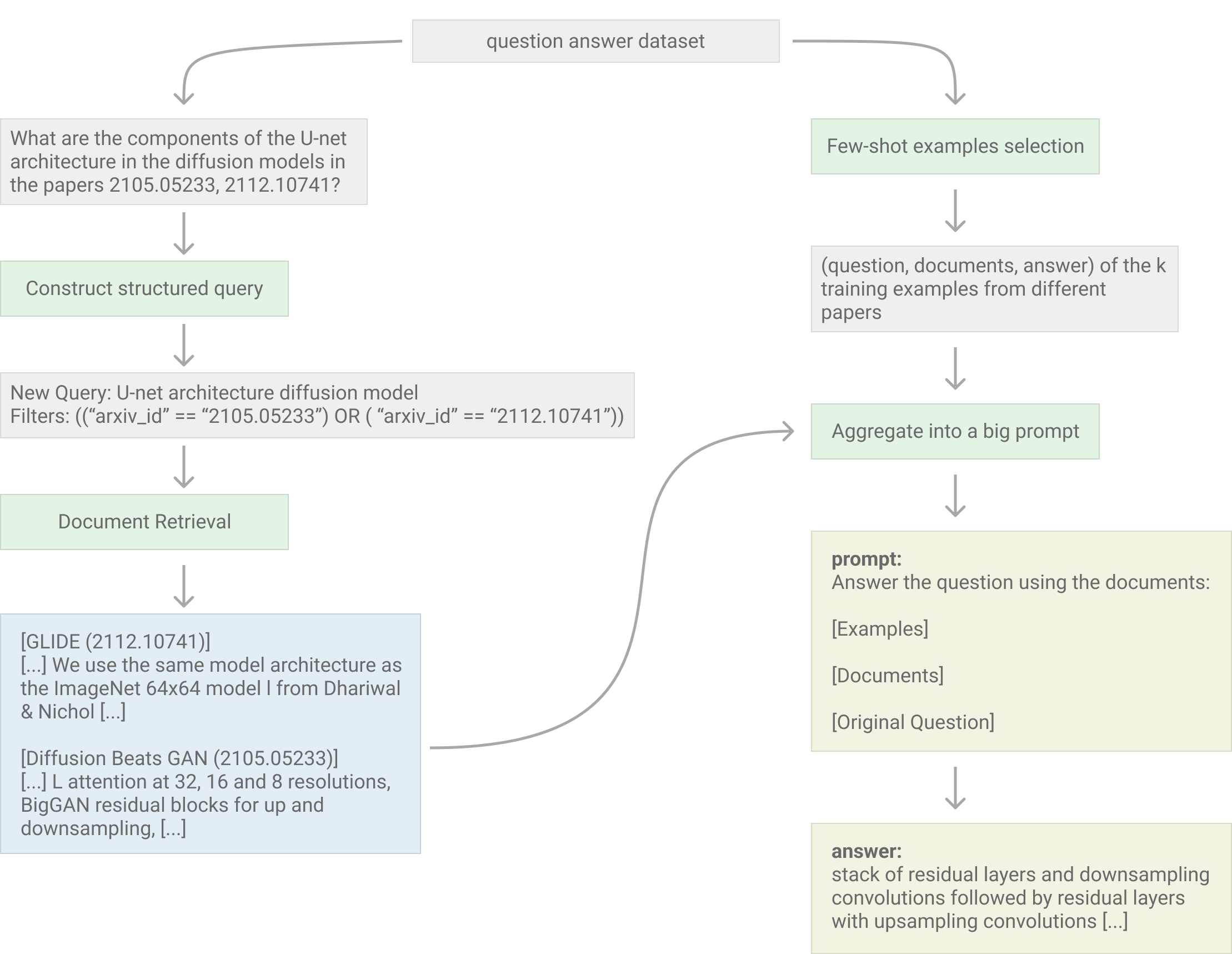

For querying,

For ingestion, we first use document loaders from langchain to turn files stored on disk into text. The text are then split into chunks of 1000-4000 characters (depending on the specific use case) with 200 characters of overlap. These chunks of text are then fed into the embedding model to generate embeddings. The embeddings are then stored in the vectorDB.

For querying,

- we first pass the query to the LLM to generate a more concise structured query that is more suitable for the vectorDB.

- The structured query is then passed to the embedding model to generate an embedding of the query.

- The embedding is then passed to the vectorDB to retrieve the top k most revelant documents.

- Our pipeline will also generate k-random examples from the training data to be used as few-shot examples.

- The documents are then combined with the few-shot examples and original query to form a large propmt.

- This prompt is then passed into the LLM for the final time to generate the final response.

Figure 1. Pipeline Architecture

Figure 2. Pipeline Data Flow

QASPER

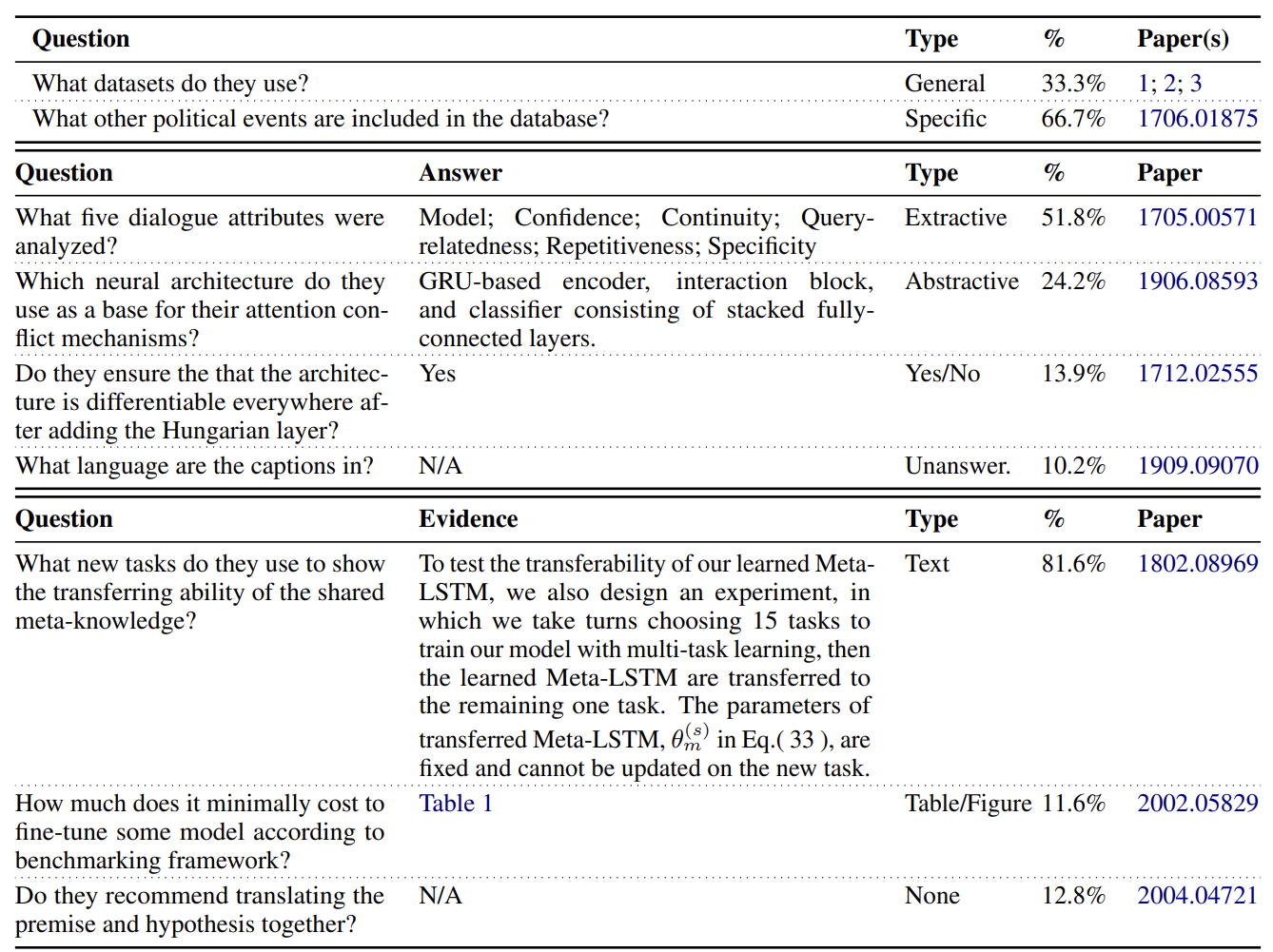

We use the QASPER dataset to evaluate the performance of our pipeline. QASPER is a dataset of 5,49 question over 1585 natural langauge processing (NLP) papers. Each question is written by an NLP practioner who only reads the abstract and title of the paper. The questions are then answered by a seperate set of NLP practioners who have read the entire paper. The dataset consists of 4 types of answers: yes/no, extractive, abstractive, and unanswerable. This is illustrated in the table below. For more details about the dataset, check out the original paper here.

Figure 3. Examples of questions, answers, and evidence sampled from QASPER. Credits to the original paper.

Evaluation

For evaluation, we run three different versions of our pipeline over the test set of the QASPER dataset. The test set contains around 1500 questions over around 400 different papers. We chose the smaller-sized test set, as opposed to the training set since due to limitations in the rate limit of the API. We use the evaluation script provided by the original paper to evaluate our results. The three versions of our pipeline are:

- LLM + 6 few-shot examples

- LLM + 6 documents from vectorDB

- LLM + 6 documents from vectorDB + 6 few-shot examples

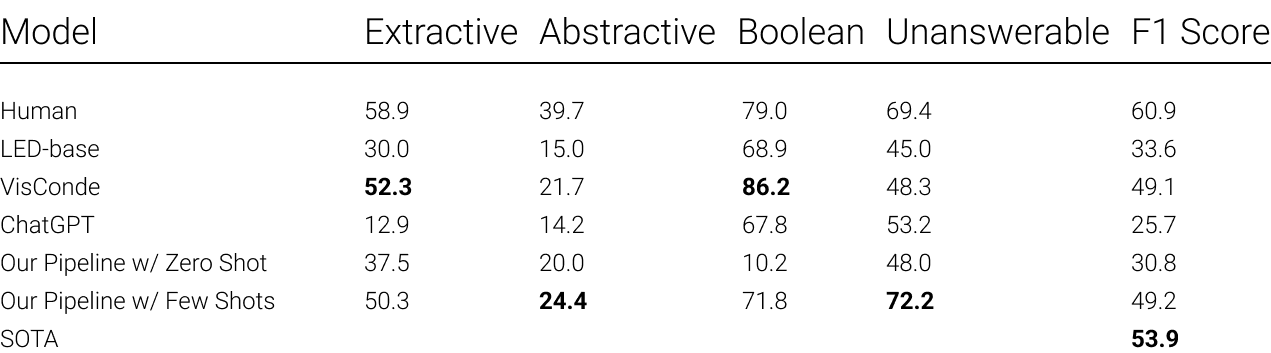

Figure 4. Results from our experiments compared to results from other papers.

Results

Based on the F1 similarity test reported by different papere and the test scripts we have ran on our pipeline and chatGPT, we have created the table below. The LED-base score is provided in the QASPER paper, while the VisConde score is provided in the VidConde paper. The SOTA score we are using in the table is the score of the CoLT5 model, which is currently the highest on the leaderboard as of June 1st 2023. Then we have ChatGPT's score with few shot examples, and the score of our pipeline with and without few shot examples as inputed prompt.

As we can see our pipeline out performs the VisConde model by just a little and have significant improvement against both the ChatGPT and LED-base score. Moreover, it is relatively close to the SOTA model, which is finetuned and trained with a lot of computation resources. Therefore, we are quite satisfied with our result.

Looking closer into each category, we can see that our pipeline performs similarily to the VidConde model at extracting information, which is both a little bit short of human testers. We can see that abstraction tasks are in general really hard for every model and our pipeline is still quite far from the human abstraction score. We also note that our pipeline underperforms in answering yes/no questions compared to both humans and the VisConde model, which means it is not nearly as good at understanding the material context as the other two. Lastly, we see that our pipeline performs significantly better than every other model at answering unanswerable questions, which means it hallucinates a lot less than those models. This is extremely important as a big part of the goal of our project is to make the LLM hallucinate as little as possible.

All in all, we are satisfied with the result our pipeline has achieved and we hope to better improve the abstractive ability of our pipeline down the line for further developement. Another note we'd like to make here is that our pipeline with out few shots prompting performs significantly worse because it does not answer the question in the correct format. For example, it would answer a yes/no question like "yes, because ...", while the result should only have yes or no for better F1 similarity score. Therefore, adding the few shot example when prompting significantly improved the performance of our pipeline.

As we can see our pipeline out performs the VisConde model by just a little and have significant improvement against both the ChatGPT and LED-base score. Moreover, it is relatively close to the SOTA model, which is finetuned and trained with a lot of computation resources. Therefore, we are quite satisfied with our result.

Looking closer into each category, we can see that our pipeline performs similarily to the VidConde model at extracting information, which is both a little bit short of human testers. We can see that abstraction tasks are in general really hard for every model and our pipeline is still quite far from the human abstraction score. We also note that our pipeline underperforms in answering yes/no questions compared to both humans and the VisConde model, which means it is not nearly as good at understanding the material context as the other two. Lastly, we see that our pipeline performs significantly better than every other model at answering unanswerable questions, which means it hallucinates a lot less than those models. This is extremely important as a big part of the goal of our project is to make the LLM hallucinate as little as possible.

All in all, we are satisfied with the result our pipeline has achieved and we hope to better improve the abstractive ability of our pipeline down the line for further developement. Another note we'd like to make here is that our pipeline with out few shots prompting performs significantly worse because it does not answer the question in the correct format. For example, it would answer a yes/no question like "yes, because ...", while the result should only have yes or no for better F1 similarity score. Therefore, adding the few shot example when prompting significantly improved the performance of our pipeline.

limitations

Although we have achieved a decent performance, the project still has some limitations. Firstly, it cannot accommodate text inputs that exceed the context window size, which restricts the complexity and depth of the data we can process. Secondly, the system struggles to answer abstractive questions that necessitate reasoning with extensive context, limiting its problem-solving capability in certain scenarios. We can see that this is a common trend among every model and even is the worst category humans are performing in. Additionally, the employed similarity search method is imperfect and could overlook important context or provide irrelevant documents, impacting the accuracy and relevance of responses. Finally, we are using ChatGPT instead of our proprietary Large Language Model (LLM), which might limit our customization ability and overall control over the system. Specifically, we do not possess the ability to finetune the model unless we switch to an open source model and run it on our own server after fine tuning.

next steps

To build on our current project, we aim to run our own Large Language Model (LLM) on our own server to solve the rate limit and privacy issue we have right now using api calls. Moreover, we would like to use this pipeline in production with the project ideas we've mentioned in the motivation section, which would allow us to identify potential issues when this pipeline is deployed in different problem spaces. We would also like to tune our parameters such as the chunk sizes when processing the knowledge base to optimize for our performace (specifically the abstraction field). Lastly, we'd also like to use the 'chain of thoughts' method in prompting by integrating generated explanations from the examples used in our few-shot learning approach into the database. According to our research, this method has been proven effective in many papers when trying to improve the performance of a LLM.

Note for Professor Pardo

Hello from Marco and Shaobo. Thank you reading through our project and for a great quarter. We have both thoroughly enjoyed your class. And this is definitely the CS class where we were able to learn the most from out of all the CS classes we have taken so far. We hope you have a great summer. FYI, we recently resubmitted our updated slides to canvas, sorry about completely forgetting about this. We hope we can still get credit for that.

Sources

- langchain

- A Dataset of Information-Seeking Questions and Answers Anchored in Research Papers

- Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks

- CoLT5: Faster Long-Range Transformers with Conditional Computation

- ChatGPT API FREE Reverse Proxy

- Chroma - the open-source embedding database.

- Modal: End-to-end cloud compute

- instructor-xl

Example outputs

As a demo, we embedded around 10 papers related to the GLIDE paper and diffusion models. Here are some example outputs from our pipeline.

Question: What are the components of the u-net architecture used in diffusion models?

Answer: One component used in a U-Net architecture for diffusion models is self-attention layers, which have become important in recent literature. Another component is residual connections. The U-Net incorporates downsampling and upsampling to operate on larger spatial scales for increased computational efficiency, and features long-range skip-connections. The network is grouped into sequences of six layers, with most sequences separated by downsampling or upsampling.

Question: Why is classifier free guidance better than classifier-guided diffusion for the GLIDE model?

Answer: Classifer-free guidance is better than classifier-guided diffusion for the GLIDE model because it simplifies the model training pipeline and does not require training a separate classifier, which can reduce the need for noisy data. Additionally, classifer-free guidance allows a single model to leverage its own knowledge during guidance, which can improve sample quality. Finally, classifer-free guidance can avoid issues related to decreased diversity that can arise from using classifier-guided diffusion.

Question: What is adaptive group normalization?

Answer: Adaptive Group Normalization (AdaGN) is a layer used in deep learning architectures that incorporates the timestep and class embedding into each residual block after a group normalization operation, similar to adaptive instance normalization and FiLM. It is used to condition diffusion models on class labels and has been found to improve sample quality.

Question: What are the core results from the paper (2112.10741)?

Answer: The core results from the paper "GLIDE: Towards Photorealistic Image Generation and Editing with Text-Guided Diffusion Models" include the exploration of text-conditional image synthesis using diffusion models and two different guidance strategies (CLIP guidance and classifier-free guidance), the demonstration of fine-tuning the model to perform image inpainting and text-driven image editing, and the comparison of their model"s performance with other state-of-the-art text-conditional image generation models, showing superior results in terms of photorealism and caption similarity. Additionally, the paper addresses safety considerations and proposes filtering training images to reduce potential harm in disinformation or biased images.

Question: What are the components of the u-net architecture used in diffusion models?

Answer: One component used in a U-Net architecture for diffusion models is self-attention layers, which have become important in recent literature. Another component is residual connections. The U-Net incorporates downsampling and upsampling to operate on larger spatial scales for increased computational efficiency, and features long-range skip-connections. The network is grouped into sequences of six layers, with most sequences separated by downsampling or upsampling.

Question: Why is classifier free guidance better than classifier-guided diffusion for the GLIDE model?

Answer: Classifer-free guidance is better than classifier-guided diffusion for the GLIDE model because it simplifies the model training pipeline and does not require training a separate classifier, which can reduce the need for noisy data. Additionally, classifer-free guidance allows a single model to leverage its own knowledge during guidance, which can improve sample quality. Finally, classifer-free guidance can avoid issues related to decreased diversity that can arise from using classifier-guided diffusion.

Question: What is adaptive group normalization?

Answer: Adaptive Group Normalization (AdaGN) is a layer used in deep learning architectures that incorporates the timestep and class embedding into each residual block after a group normalization operation, similar to adaptive instance normalization and FiLM. It is used to condition diffusion models on class labels and has been found to improve sample quality.

Question: What are the core results from the paper (2112.10741)?

Answer: The core results from the paper "GLIDE: Towards Photorealistic Image Generation and Editing with Text-Guided Diffusion Models" include the exploration of text-conditional image synthesis using diffusion models and two different guidance strategies (CLIP guidance and classifier-free guidance), the demonstration of fine-tuning the model to perform image inpainting and text-driven image editing, and the comparison of their model"s performance with other state-of-the-art text-conditional image generation models, showing superior results in terms of photorealism and caption similarity. Additionally, the paper addresses safety considerations and proposes filtering training images to reduce potential harm in disinformation or biased images.